Scaling Applications in AWS

April 25, 2020 / Bryan Reynolds

Cloud platforms typically provide users with access to virtual machines (VMs) rather than physical servers. This architecture allows the platform to quickly allocate computing resources such as storage, memory, and processing capability based on demand. Amazon Web Services (AWS) offers a particularly large number of ways for users to configure scaling on their platform, allowing them to find the best balance between cost and available resources. Because there are so many scaling options on AWS, users should develop an overall strategy before configuring their environment.

AWS Overview

AWS is a subsidiary of Amazon that provides cloud-computing platforms and application programming interfaces (APIs) to its users, who include individuals, organizations, and governments. It allocates resources on demand, and users are charged for those resources on a pay-as-you-go basis. Amazon promotes AWS as a way to obtain large-scale computing capacity faster and more cheaply than building and maintaining physical servers. AWS currently dominates the cloud-computing market and has a significantly larger market share than any of its competitors.

AWS uses a REST architecture and is accessible over HTTP. Amazon operates this infrastructure from data centers throughout the world, which are grouped into geographic regions. For example, AWS currently has six regions in North America. Users can obtain clusters of VMs or dedicated physical computers, and Amazon is responsible for securing the underlying physical infrastructure.

AWS includes many discrete services: more than 212 as of 2020. Together, these services provide the building blocks and tools needed to develop a cloud infrastructure. Most of them aren't exposed directly to end users. Instead, they provide their functionality through APIs, which developers call from their applications. Older APIs use the SOAP protocol, while newer ones use JSON. Each service incurs additional charges based on usage, although different services measure usage in different ways.

AWS services provide a wide range of functions, including the following:

Analytics

Applications

Computing

Database

Deployment

Internet of Things (IoT)

Management

Mobile support

Networking

Storage

Development

Amazon Elastic Compute Cloud (EC2) is one of the most important AWS services and is used by virtually all subscribers. It provides users with access to VMs, which are software emulations of physical computers. A VM includes all the resources a computing system requires, such as processing, memory, and storage. EC2 also provides a choice of operating systems (OSs), preloaded software, and networking capabilities. Amazon Simple Storage Service (Amazon S3) is another widely used AWS service, and it provides storage for subscribers.

Scalability

Scalability is a computing resource’s ability to handle changes in demand for that resource. Many businesses experience dramatic changes in demand for their products and services due to factors such as seasonal changes, new projects, and business growth. Scalability is therefore one of the most desirable benefits of cloud computing, since it allows businesses to pay only for the services they actually use. In contrast, organizations with a physical infrastructure must ensure it can handle their peak demand at all times.

Types

The specific methods of scaling in the cloud include vertical scaling, horizontal scaling, and diagonal scaling.

Vertical Scaling

A hotel provides a common analogy for vertical scaling. This hotel has a fixed number of rooms that guests are constantly moving in and out of. They can easily do this as long as there are rooms available to accommodate them. However, the hotel won’t be able to take any more guests if all of its rooms are already occupied.

Vertical scaling in computing functions in a similar manner. You can add resources to a VM as needed, provided the physical machine has the capacity to accept those resources. These resources can also be easily removed once there is no longer a demand for them. While the physical machine has an ultimate limit on the resources it can provide, vertical scaling is an effective way of increasing the capabilities of a VM.
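The hotel analogy can be made concrete with a small model. In this model, a VM grows or shrinks only while its host still has free capacity. This is a toy sketch under stated assumptions: `Host`, `VM`, and `scale_vm` are hypothetical names that illustrate the idea and do not correspond to any AWS API. On EC2, vertical scaling is usually done by changing the instance type.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """A physical machine with a fixed pool of vCPUs and memory (GiB)."""
    total_vcpus: int
    total_mem_gib: int
    used_vcpus: int = 0
    used_mem_gib: int = 0


@dataclass
class VM:
    """A virtual machine carved out of a host's resources."""
    vcpus: int
    mem_gib: int


def scale_vm(host: Host, vm: VM, d_vcpus: int, d_mem_gib: int) -> bool:
    """Grow (positive deltas) or shrink (negative deltas) a VM in place.

    Returns False and leaves everything unchanged if the host lacks free
    capacity or the VM would drop below 1 vCPU / 1 GiB.
    """
    new_vcpus = vm.vcpus + d_vcpus
    new_mem = vm.mem_gib + d_mem_gib
    if new_vcpus < 1 or new_mem < 1:
        return False
    # The hotel is full: the host cannot hand out more than it physically has.
    if host.used_vcpus + d_vcpus > host.total_vcpus:
        return False
    if host.used_mem_gib + d_mem_gib > host.total_mem_gib:
        return False
    host.used_vcpus += d_vcpus
    host.used_mem_gib += d_mem_gib
    vm.vcpus, vm.mem_gib = new_vcpus, new_mem
    return True


host = Host(total_vcpus=16, total_mem_gib=64, used_vcpus=2, used_mem_gib=8)
vm = VM(vcpus=2, mem_gib=8)
print(scale_vm(host, vm, 6, 24))   # → True  (grow to 8 vCPUs / 32 GiB)
print(scale_vm(host, vm, 10, 0))   # → False (would need 18 vCPUs on a 16-vCPU host)
```

Shrinking works the same way with negative deltas, and it returns the freed resources to the host for other VMs to use.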

Horizontal Scaling

A two-lane highway provides a good illustration of horizontal scaling. Assume that cars can initially travel in both directions without any problems. However, traffic increases as buildings are constructed along the highway and attract more people. The highway eventually becomes so crowded that traffic begins to slow. More lanes can then be added to the highway, restoring the traffic flow to its unimpeded level. While this strategy requires considerable time and expense, it does solve the problem.

In computing, horizontal scaling means adding servers to your network, rather than adding resources to an existing server as vertical scaling does. It takes more time than vertical scaling, but the added servers work together, so you can spread concurrent workloads across them. Horizontal scaling is therefore useful for handling heavy traffic efficiently.
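To see how horizontal scaling spreads load, consider two steps. First, count how many identical servers a given load needs. Then assign incoming requests to those servers in turn. This is a minimal sketch that assumes uniform requests and simple round-robin balancing. `servers_needed` and `distribute` are hypothetical helpers, not part of Elastic Load Balancing or any other AWS service.

```python
from __future__ import annotations

import math
from itertools import cycle


def servers_needed(concurrent_requests: int, per_server_capacity: int) -> int:
    """Smallest number of identical servers that can absorb the load (at least 1)."""
    return max(1, math.ceil(concurrent_requests / per_server_capacity))


def distribute(requests: list[str], servers: list[str]) -> dict[str, list[str]]:
    """Spread requests across servers round-robin, as a basic load balancer might."""
    assignment: dict[str, list[str]] = {server: [] for server in servers}
    for request, server in zip(requests, cycle(servers)):
        assignment[server].append(request)
    return assignment


# 450 concurrent requests; assume each server comfortably handles 100 at a time.
n_servers = servers_needed(450, 100)
pool = [f"server-{i}" for i in range(1, n_servers + 1)]
print(n_servers)  # → 5
print(distribute([f"req-{n}" for n in range(1, 8)], pool))
```

Adding a server only requires extending `pool`. This is the highway analogy's extra lane.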

Diagonal Scaling

Diagonal scaling combines vertical and horizontal scaling, and it is often the most efficient method of scaling infrastructure. It generally consists of scaling vertically until you reach the capacity of your existing server. At that point, you scale horizontally by cloning the server, and then you continue scaling the clone vertically.
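The diagonal pattern can be sketched as a loop: grow the newest server until it reaches a maximum size, then add another server and grow that one. Capacities are abstract units. We assume "cloning" means launching a copy of the server's configuration at its base size. `diagonal_scale` is a hypothetical helper, not an AWS feature.

```python
from __future__ import annotations


def diagonal_scale(demand: int, step: int, max_size: int) -> list[int]:
    """Return the size of each server (abstract capacity units) needed for `demand`.

    The newest server grows by `step` until it hits `max_size` (vertical
    scaling). When it can't grow any further, a clone is added at the base size
    `step` (horizontal scaling) and vertical scaling continues on the clone.
    """
    if step <= 0 or max_size < step:
        raise ValueError("need 0 < step <= max_size")
    servers = [step]                     # start with one minimally sized server
    while sum(servers) < demand:
        if servers[-1] + step <= max_size:
            servers[-1] += step          # vertical: enlarge the current server
        else:
            servers.append(step)         # horizontal: clone, starting small
    return servers


print(diagonal_scale(demand=6, step=2, max_size=8))    # → [6]
print(diagonal_scale(demand=20, step=4, max_size=8))   # → [8, 8, 4]
```

Small loads stay on one server. Only when that server is maxed out does the fleet grow sideways.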

Estimating Requirements

Scaling in the cloud provides enormous flexibility, which saves time and money. An increase in demand is easy to accommodate by simply adding the resources needed to handle the new load. When demand drops off, scaling down is just as easy. This capability is financially beneficial because of the pay-as-you-go model that cloud platforms use.

An organization no longer needs to guess the maximum capacity of its infrastructure and purchase the needed equipment upfront, as it must for a physical data center. Underestimating this capacity means you won’t have the resources you need, while overestimating it means paying for resources you don’t use. Scaling in the cloud lets you pay for the resources you need right now and expand only as needed. Your infrastructure is far less likely to become overloaded, and the cloud provider is responsible for managing the physical servers.
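A quick calculation makes the cost argument concrete. It compares the instance-hours needed when you provision for the daily peak with those needed when capacity follows hourly demand. The demand profile below is invented for illustration. The sketch ignores scaling lag, minimum fleet sizes, and pricing differences between purchase options.

```python
from __future__ import annotations


def instance_hours(hourly_demand: list[int]) -> tuple[int, int]:
    """Compare provisioning for the peak with paying only for hourly demand.

    `hourly_demand` is the number of instances each hour actually needs.
    Returns (fixed, elastic) instance-hours.
    """
    fixed = max(hourly_demand) * len(hourly_demand)  # sized for peak, runs all day
    elastic = sum(hourly_demand)                       # scaled to demand each hour
    return fixed, elastic


# Hypothetical day: quiet overnight, busy during business hours.
demand = [2] * 6 + [4, 8] + [10] * 3 + [8] * 2 + [10] * 3 + [8, 6, 4, 4] + [2] * 4
fixed, elastic = instance_hours(demand)
print(fixed, elastic)                                           # → 240 130
print(f"idle share of fixed fleet: {1 - elastic / fixed:.0%}")  # → 46%
```

Under these assumptions, nearly half of a peak-sized fleet sits idle over the day. Pay-as-you-go scaling avoids that cost.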

AWS Auto Scaling

AWS Auto Scaling is an AWS service that lets you scale multiple resources safely and easily so you can optimize your applications’ performance. It simplifies this process by scaling groups of related resources at the same time. AWS Auto Scaling relies on Amazon CloudWatch metrics and alarms. There’s no additional charge for AWS Auto Scaling; you pay only for CloudWatch and the other resources you use when scaling.

Auto Scaling allows you to ensure your scaling policies are consistent across the entire infrastructure that supports your application. Once it’s properly configured, Auto Scaling automatically scales your resources as needed to implement your scaling strategy, ensuring you only pay for the resources you actually need. The Auto Scaling console allows you to use the scaling features of multiple services from a single interface. You can configure scaling for all the resources your application needs in a few minutes, whether they’re individual resources or entire applications.

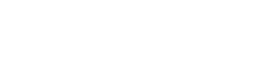

The figure below outlines the process for implementing Auto Scaling. Once you obtain access to Auto Scaling, you examine your applications to determine what you can scale. You then choose whether you want to optimize by cost or performance. After that, you only need to track scaling as it occurs.

Figure 1: Workflow for AWS Auto Scaling

Resources

Auto Scaling works with a variety of AWS resources, including Amazon EC2 Auto Scaling groups and Spot Fleets. It also supports Amazon Elastic Container Service (ECS) services, although it can’t discover ECS services through resource tags. Other resources you can scale with AWS Auto Scaling include Amazon DynamoDB throughput capacity and Amazon Aurora Replicas.

Benefits

Automatically maintaining performance is one of the most significant benefits of Auto Scaling. It continually monitors the utilization of the resources supporting your applications to ensure they deliver the desired performance. When a resource becomes constrained, Auto Scaling automatically increases capacity, so your application maintains that performance when demand spikes.

Auto Scaling helps you anticipate your operating costs and avoid overspending by optimizing your resource utilization for cost. This setting ensures you only pay for resources you need since Auto Scaling automatically removes resource capacity that’s no longer needed after demand drops.

Auto Scaling lets you choose scaling strategies that are both effective and easy to understand, helping you find the best scaling balance for your applications. From those strategies, it creates scaling policies and sets utilization targets based on your scaling preferences.

You can also use Auto Scaling to monitor the average utilization of your scalable resources, allowing you to easily define target levels for each resource group. Furthermore, you can automate these groups’ responses to changes in resource demand.

Another benefit of AWS Auto Scaling is the ability to optimize the balance between cost and performance for your applications as shown in the figure below:

Figure 2: Balanced Scaling

Auto Scaling also allows you to quickly configure unified scaling for all your application’s scalable resources.

Strategies

A scaling plan is essential for effectively configuring and managing the scaling of resources with Auto Scaling. The plan can use both dynamic and predictive scaling, adding resources to handle increased workloads and removing them when they’re no longer required. In addition to optimizing resource utilization for cost, availability, or a balance of the two, you can create custom scaling strategies based on metrics and thresholds you define.

Your scaling plan is a configurable set of instructions for scaling resources. You can use a separate scaling plan for each application, provided you identify that application’s resources with the appropriate tags or through an AWS CloudFormation stack. CloudFormation helps you configure those resources, so you can spend more time managing your applications. AWS Auto Scaling also recommends a scaling strategy for each resource. Once you finalize your strategy, it combines scaling methods to support it.

Dynamic Scaling

Dynamic scaling creates scaling policies for your resources based on tracking utilization targets. These policies adjust your resource capacities in response to real-time changes in resource utilization. The purpose of dynamic scaling is to provide the minimum capacity needed to maintain the utilization at the level specified by the scaling strategy. This approach is similar to setting the thermostat in your home to a specific temperature. Once you select the setting you desire, the thermostat maintains your home at that temperature.

The figure below illustrates the effect of dynamic scaling on utilization. Without dynamic scaling, capacity remains constant while utilization reflects changes in demand. With dynamic scaling, capacity changes in response to demand, keeping the utilization rate nearly constant.

Figure 3: Dynamic Scaling

Assume for this example of dynamic scaling that you configure your scaling plan to keep the CPU utilization for ECS at 50 percent, meaning 50 percent of the CPU capacity reserved for ECS is in use. When the CPU utilization for ECS rises above 50 percent, the scaling policy is triggered to add CPU capacity to handle the increased load. Similarly, the scaling policy removes that extra capacity when utilization drops below 50 percent.
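The thermostat behavior in this example can be approximated with a proportional rule. If load spreads evenly, total load is roughly capacity times utilization. The capacity needed at the target is that load divided by the target utilization. This is an illustrative approximation, not AWS’s published algorithm. Real target tracking policies act through CloudWatch alarms and scale in more gradually than they scale out. `target_tracking_capacity` and its min/max defaults are hypothetical, and capacity here stands for the number of ECS tasks.

```python
import math


def target_tracking_capacity(current_capacity: int,
                             current_utilization: float,
                             target_utilization: float,
                             min_capacity: int = 1,
                             max_capacity: int = 100) -> int:
    """Capacity that would bring average utilization back to the target.

    Assumes load spreads evenly, so total load ~ capacity x utilization and
    the capacity needed at the target is that load divided by the target.
    """
    if current_capacity <= 0 or target_utilization <= 0:
        raise ValueError("capacity and target must be positive")
    desired = math.ceil(current_capacity * current_utilization / target_utilization)
    # Never go outside the bounds configured in the scaling plan.
    return max(min_capacity, min(max_capacity, desired))


# ECS example from the text: keep CPU utilization at 50 percent.
print(target_tracking_capacity(4, 75.0, 50.0))   # → 6 (scale out)
print(target_tracking_capacity(6, 20.0, 50.0))   # → 3 (scale in)
print(target_tracking_capacity(4, 50.0, 50.0))   # → 4 (on target)
```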

Predictive Scaling

Predictive scaling adjusts capacity based on historical traffic patterns. It uses those patterns to schedule future changes in the number of EC2 instances so capacity keeps up with demand. This technique uses machine learning (ML) to identify daily and weekly traffic patterns. Because AWS Auto Scaling can provision EC2 instances before they’re needed, your applications have capacity ready when demand rises without being overprovisioned, which can lower costs.

Predictive scaling works alongside target tracking so that EC2 capacity keeps pace with expected changes in application traffic. Predictive scaling schedules the minimum capacity changes needed for the forecast traffic, while target tracking adjusts capacity to handle current traffic. Target tracking monitors utilization as conditions change and adjusts capacity in response to unexpected fluctuations, especially traffic spikes. Users configure predictive scaling and target tracking together when creating the scaling plan.
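One way to picture the two mechanisms working together: the forecast sets a floor for the hour, and target tracking can push capacity above that floor when real traffic exceeds the forecast. The sketch assumes the larger of the two values wins. `combined_capacity` is a hypothetical helper, and its target tracking term is the same proportional approximation used for dynamic scaling, not AWS’s exact logic.

```python
import math


def combined_capacity(scheduled_minimum: int,
                      current_capacity: int,
                      current_utilization: float,
                      target_utilization: float) -> int:
    """Capacity when a forecast-driven minimum and target tracking work together.

    `scheduled_minimum` stands in for what predictive scaling scheduled for
    this hour; the second term is a proportional target-tracking estimate.
    The larger wins, so forecasts set a floor and spikes can push above it.
    """
    reactive = math.ceil(current_capacity * current_utilization / target_utilization)
    return max(scheduled_minimum, reactive)


# Before the forecast rush: load is still light, but capacity rises to the floor.
print(combined_capacity(8, 4, 40.0, 50.0))    # → 8
# Unexpected spike above the forecast: target tracking takes over.
print(combined_capacity(8, 8, 90.0, 50.0))    # → 15
```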

The figure below outlines the predictive scaling process, which consists of three stages. The first stage is to analyze the historical load, primarily to identify daily and weekly cycles. The second is to generate a forecast of future load based on the historical analysis. The final stage in predictive scaling is to schedule the capacity changes needed to handle the expected changes.

Figure 4: Predictive Scaling

Assume for this example that you have enabled predictive scaling for an Auto Scaling group and configured it to maintain an average CPU utilization of 50 percent. The forecast predicts a spike in traffic at 8 am every morning, when everyone starts working. The scaling plan then schedules the appropriate scaling action to ensure the group is able to handle the expected workload increase ahead of time. This process occurs continually to keep resource utilization as close to 50 percent as possible at all times.
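The three stages can be sketched with a simple stand-in for the ML model: average each hour of the day across past days and treat that profile as tomorrow’s forecast. Then schedule the capacity for each hour one hour early. The sketch assumes every instance handles 100 requests per second while staying near 50 percent CPU. The history is invented, and `hourly_profile` and `schedule_capacity` are hypothetical names. AWS’s forecasting model is far more sophisticated than this average.

```python
from __future__ import annotations

import math
from statistics import mean


def hourly_profile(history: list[list[float]]) -> list[float]:
    """Stages 1-2: average each hour of the day across past days.

    This seasonal average serves as tomorrow's forecast.
    """
    return [mean(day[hour] for day in history) for hour in range(24)]


def schedule_capacity(forecast: list[float],
                      load_per_instance: float,
                      lead_hours: int = 1) -> dict[int, int]:
    """Stage 3: map each hour of the day to the instance count to put in place.

    Capacity for hour h is scheduled at hour h - lead_hours, so instances
    are running before the forecast load arrives.
    """
    schedule: dict[int, int] = {}
    for hour, load in enumerate(forecast):
        instances = max(1, math.ceil(load / load_per_instance))
        schedule[(hour - lead_hours) % 24] = instances
    return schedule


# Hypothetical history: 100 req/s overnight, 400 req/s from 8 am until 6 pm.
day = [400.0 if 8 <= h < 18 else 100.0 for h in range(24)]
history = [day, day, day]
plan = schedule_capacity(hourly_profile(history), load_per_instance=100.0)
print(plan[7])   # → 4  (ready before the 8 am rush)
print(plan[18])  # → 1  (scaled back in for the evening)
```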

Additional Scaling Options

In addition to AWS Auto Scaling, other scaling options in AWS include Amazon EC2 Auto Scaling, the Application Auto Scaling API, and scaling for individual services or applications.

EC2 Auto Scaling

EC2 Auto Scaling is one of the most useful scaling options after AWS Auto Scaling. It ensures that the optimum number of instances is available at all times, based on the parameters specified in the scaling plan. EC2 Auto Scaling can also detect when an instance is unhealthy and terminate it. It can then launch another EC2 instance to replace it, keeping the number of functioning instances at the desired level. EC2 Auto Scaling provides applications with a high level of availability, cost management, and fault tolerance.
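The health-check behavior amounts to a reconciliation step: remove unhealthy instances, then launch replacements until the fleet is back at its desired size. This is a minimal sketch. `Instance`, `reconcile`, and the replacement ID format are hypothetical. Real EC2 Auto Scaling uses EC2 or load balancer health checks and applies configurable termination policies when it removes excess instances.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import count

_launch_ids = count(1)  # hypothetical ID source for newly launched instances


@dataclass
class Instance:
    instance_id: str
    healthy: bool = True


def reconcile(fleet: list[Instance], desired: int) -> list[Instance]:
    """One pass of a health-check loop.

    Terminates unhealthy instances, launches replacements until the fleet
    reaches `desired`, and trims any excess from the end of the list.
    """
    survivors = [inst for inst in fleet if inst.healthy]   # terminate the unhealthy
    while len(survivors) < desired:
        survivors.append(Instance(f"i-replacement-{next(_launch_ids)}"))
    return survivors[:desired]


fleet = [Instance("i-1"), Instance("i-2", healthy=False), Instance("i-3")]
fleet = reconcile(fleet, desired=3)
print([inst.instance_id for inst in fleet])  # → ['i-1', 'i-3', 'i-replacement-1']
```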

AWS users who want automatic scaling often have to choose between AWS Auto Scaling and EC2 Auto Scaling. AWS Auto Scaling is the better option when you need to scale multiple resources across multiple services. This is primarily because configuring scaling policies from the AWS Auto Scaling console is faster than managing each resource’s policies through its own service console. AWS Auto Scaling is also easier to use because it includes predefined scaling strategies that simplify the development of scaling policies.

AWS Auto Scaling is also a better choice than EC2 Auto Scaling if you want to use predictive scaling, even for EC2 resources. Furthermore, AWS Auto Scaling lets you define dynamic scaling policies with predefined scaling strategies for groups of resources, including EC2 Auto Scaling groups.

On the other hand, you should use EC2 Auto Scaling when you only need to scale EC2 Auto Scaling groups, or when you only need automatic scaling to maintain your EC2 fleet. EC2 Auto Scaling is also the better choice if you need scheduled scaling policies, since AWS Auto Scaling only supports target tracking scaling policies. Finally, you need EC2 Auto Scaling to create or configure EC2 Auto Scaling groups, because these groups must be set up outside AWS Auto Scaling, for example through the Auto Scaling API, CloudFormation, or the EC2 console.

Application Auto Scaling API

The Application Auto Scaling API is usually the best way to scale non-EC2 resources. This method lets you define scaling policies to scale many AWS resources automatically and schedule scaling actions, including one-time and recurring actions. Application Auto Scaling can scale the following:

Amazon ECS services

Amazon EC2 Spot fleets

Amazon EMR clusters

Amazon AppStream 2.0 fleets

Amazon Aurora Replicas

Amazon SageMaker endpoint variants

In addition, Application Auto Scaling can scale the read and write capacity for Amazon DynamoDB tables and global secondary indexes.
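A scheduled action in Application Auto Scaling sets new minimum and maximum capacity at a given time. The sketch below models only how one-time and recurring (here, daily) actions interact: at any moment, the most recent action that has fired determines the bounds. `ScheduledAction`, `bounds_in_effect`, and the example actions are hypothetical. The real service expresses schedules as `at(...)`, `rate(...)`, or `cron(...)` expressions, and this sketch does not parse those.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass
class ScheduledAction:
    name: str
    min_capacity: int
    max_capacity: int
    at: Optional[datetime] = None    # set for a one-time action
    daily_at: Optional[time] = None  # set for an action that recurs every day


def bounds_in_effect(actions: list[ScheduledAction], now: datetime,
                     default: tuple[int, int]) -> tuple[int, int]:
    """Return (min, max) capacity set by the most recent action that has fired."""
    fired: list[tuple[datetime, ScheduledAction]] = []
    for action in actions:
        if action.at is not None and action.at <= now:
            fired.append((action.at, action))
        if action.daily_at is not None:
            today = datetime.combine(now.date(), action.daily_at)
            # If today's run hasn't happened yet, the last run was yesterday.
            last_run = today if today <= now else today - timedelta(days=1)
            fired.append((last_run, action))
    if not fired:
        return default
    _, latest = max(fired, key=lambda pair: pair[0])
    return latest.min_capacity, latest.max_capacity


actions = [
    ScheduledAction("business-hours", 4, 20, daily_at=time(8, 0)),
    ScheduledAction("overnight", 1, 4, daily_at=time(20, 0)),
    ScheduledAction("launch-day", 10, 40, at=datetime(2020, 5, 1, 9, 0)),
]
print(bounds_in_effect(actions, datetime(2020, 4, 30, 12, 0), (1, 10)))  # → (4, 20)
print(bounds_in_effect(actions, datetime(2020, 5, 1, 10, 0), (1, 10)))   # → (10, 40)
```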

Individual Services

You should create a scaling plan for your application’s resources with AWS Auto Scaling if you want automatic scaling for multiple resources across multiple services. In this scenario, AWS Auto Scaling provides unified scaling and predefined guidance, which makes scaling configuration faster and easier. However, you can also scale individual services through their respective consoles, or with the Auto Scaling API and the Application Auto Scaling API. This approach is most appropriate when you need scheduled or step scaling policies, since AWS Auto Scaling only creates target tracking scaling policies.
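Step scaling differs from target tracking in that you choose the capacity changes yourself. Each step maps a range of "how far the metric is past the alarm threshold" to a fixed adjustment. This sketch models only that lookup, using absolute changes in instance count. `Step` and `step_adjustment` are hypothetical names. Real step scaling policies also offer other adjustment types and warm-up settings, which are omitted here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    lower: float            # breach size (metric minus threshold) where the step begins
    upper: Optional[float]  # exclusive upper bound; None means unbounded
    change: int             # instances to add (negative values remove instances)


def step_adjustment(metric: float, threshold: float, steps: list[Step]) -> int:
    """Return the capacity change for the step that contains the breach size."""
    breach = metric - threshold
    for step in steps:
        if breach >= step.lower and (step.upper is None or breach < step.upper):
            return step.change
    return 0  # no step matched, e.g. the alarm threshold isn't breached


# Alarm at 50% CPU: bigger breaches trigger bigger scale-out steps.
steps = [Step(0, 10, 1), Step(10, 30, 2), Step(30, None, 4)]
for cpu in (45.0, 55.0, 70.0, 95.0):
    print(cpu, step_adjustment(cpu, 50.0, steps))
```

With these steps, 45 percent CPU adds nothing, 55 percent adds one instance, 70 percent adds two, and 95 percent adds four.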

Applications

AWS Auto Scaling is generally the best choice for scaling individual applications with variable loads. An ecommerce application is a common example of this scenario, since the number of online shoppers typically fluctuates greatly throughout the day. A typical ecommerce architecture consists of three tiers: incoming traffic, compute, and data.

In this case, use Elastic Load Balancing to distribute incoming traffic. Use EC2 for the compute tier, with AWS Auto Scaling managing its EC2 Auto Scaling groups. Use DynamoDB for the data tier, with AWS Auto Scaling scaling its DynamoDB tables.

Other applications with cyclical traffic patterns are also good candidates for AWS Auto Scaling. These commonly include business applications with high traffic during the workday and low traffic at night. Applications that perform batch processing, periodic analysis, and testing also exhibit this type of traffic pattern. Marketing campaigns with frequent growth spikes are another possibility.

Summary

The ability to scale resources in response to changes in workload is one of the most common reasons for migrating to the cloud. However, you must manage scaling effectively to obtain the maximum benefit from this feature. AWS offers multiple methods of automatically scaling resources that provide a high degree of control over your resource allocation, whether it’s for the entire platform or individual applications. The large number of scaling approaches available in AWS also means that you should consider many factors when developing your scaling strategy. Baytech has experienced staff ready to assist you on your next project.